If you’ve been curious about how transfer learning works, you’re in the right place. I’ll walk you through the idea the way a friend would over coffee: no wizardry, just practical takes and small examples you can actually use. Transfer learning is the smart shortcut that lets you reuse an existing model’s knowledge so you don’t have to train from scratch.

How transfer learning works: the quick recipe

Here’s the short version. Big models trained on massive datasets learn patterns in stages: early layers capture general features (edges, color tones, and textures in images; basic grammar in text), while later layers learn task-specific decisions (cat vs. dog, or positive vs. negative sentiment). With transfer learning, you keep the useful early layers, swap in or add a small head for your task, and fine-tune on your own data. Compared with training from scratch, it’s faster, cheaper, and often more accurate on small-to-medium datasets. Nice ROI for devs and product teams. 💡
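
To make the recipe concrete, here’s a toy sketch in plain Python (standard library only, so it runs anywhere). The “pretrained” backbone is a hypothetical stand-in: `PRETRAINED_W` is hand-set rather than learned on a big dataset, the task produced by `make_toy_data` is made up, and `lr=0.1` / `epochs=200` are illustrative values. What it does show faithfully is the mechanic: the backbone’s weights stay frozen and only the new logistic-regression head is trained on your small dataset.

```python
import math
import random

# HYPOTHETICAL stand-in for pretrained weights. In a real project these come
# from a model trained on a large dataset; here they are fixed numbers so the
# sketch runs with the standard library only. Each row maps 2 inputs -> 1 feature.
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def backbone(x):
    """Frozen feature extractor (linear layer + ReLU). Training never updates it."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class Head:
    """New task-specific head: logistic regression on top of backbone features."""

    def __init__(self, n_features):
        self.w = [0.0] * n_features
        self.b = 0.0

    def predict_proba(self, feats):
        return sigmoid(sum(w * f for w, f in zip(self.w, feats)) + self.b)

    def train(self, data, lr=0.1, epochs=200):
        # Plain SGD on log-loss; only the head's w and b are updated.
        for _ in range(epochs):
            for x, y in data:
                feats = backbone(x)  # reuse the frozen features
                err = self.predict_proba(feats) - y  # d(log-loss)/d(logit)
                self.w = [w - lr * err * f for w, f in zip(self.w, feats)]
                self.b -= lr * err


def make_toy_data(n, seed=0):
    """HYPOTHETICAL task: label is 1 when x0 + x1 > 1 (points near the boundary skipped)."""
    rng = random.Random(seed)
    data = []
    while len(data) < n:
        x = [rng.random(), rng.random()]
        s = x[0] + x[1]
        if abs(s - 1.0) > 0.1:
            data.append((x, 1 if s > 1.0 else 0))
    return data


def accuracy(head, data):
    hits = sum((head.predict_proba(backbone(x)) > 0.5) == bool(y) for x, y in data)
    return hits / len(data)


data = make_toy_data(100)
frozen_before = [row[:] for row in PRETRAINED_W]
head = Head(n_features=len(PRETRAINED_W))
head.train(data)
print(f"train accuracy: {accuracy(head, data):.2f}")
```

In a real framework the same idea usually takes a couple of lines: in PyTorch, for example, you set `requires_grad = False` on the backbone’s parameters and pass only the new head’s parameters to the optimizer.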

Why this actually helps you

If you’re building real things in the U.S. (apps, prototypes, or ML features for startups), transfer learning is a game-changer. Instead of burning cloud credits training from zero, you can:

- Build image classifiers for niche products using a few hundred pics.

- Customize language models (chatbots, customer support) with a modest dataset.

- Run models on edge devices by fine-tuning compact pretrained networks, where you once needed big servers.

This approach reduces time-to-market and makes ML accessible to smaller teams without a PhD or a supercomputer.

Tips, gotchas, and quick fixes

- Pick a pretrained model close to your domain. If you’re working with medical scans, a model trained on natural photos may struggle, so look for domain-adjacent weights if possible.

- Watch for bias. Pretrained models inherit the biases of their training data, so test across diverse samples before shipping.

- Use data augmentation. When your dataset’s small, synthetic variety (rotations, noise, paraphrases) helps prevent overfitting.

- Fine-tune slowly. Unfreeze layers gradually and use a low learning rate so updates don’t wipe out what the pretrained layers already learned.
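
The “fine-tune slowly” tip can be written down as a schedule. This is a minimal sketch, assuming a model whose layers you can name in order from input to output; `gradual_unfreeze_plan`, the layer names, `head_lr=1e-3`, and `decay=0.5` are all hypothetical, illustrative choices. Stage 0 trains only the new head; each later stage unfreezes one more layer, and layers closer to the input get smaller learning rates so their general-purpose features change the least.

```python
def gradual_unfreeze_plan(layers, head_lr=1e-3, decay=0.5):
    """Return one {layer_name: learning_rate} dict per training stage.

    `layers` is ordered from input to output, and the last entry is the new head.
    Stage 0 trains only the head. Each later stage unfreezes one more layer,
    moving back toward the input. Layers farther from the head get smaller
    learning rates: head_lr * decay ** (distance from the head).
    Layers missing from a stage's dict stay frozen during that stage.
    """
    plan = []
    for stage in range(len(layers)):
        trainable = layers[len(layers) - 1 - stage:]
        plan.append({
            name: head_lr * decay ** (len(trainable) - 1 - i)
            for i, name in enumerate(trainable)
        })
    return plan


# HYPOTHETICAL layer names for a small image backbone plus a new classifier head.
LAYERS = ["stem", "block1", "block2", "block3", "head"]

for stage, lrs in enumerate(gradual_unfreeze_plan(LAYERS)):
    frozen = [name for name in LAYERS if name not in lrs]
    pretty = ", ".join(f"{name}={lr:.1e}" for name, lr in lrs.items())
    print(f"stage {stage}: train [{pretty}] | frozen {frozen}")
```

Each stage’s dict maps naturally onto a framework’s controls. In PyTorch, for instance, frozen layers get `requires_grad = False`, and each trainable layer can become its own optimizer parameter group with its own `lr`. How long to train at each stage is a judgment call; watching validation loss is a reasonable guide.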

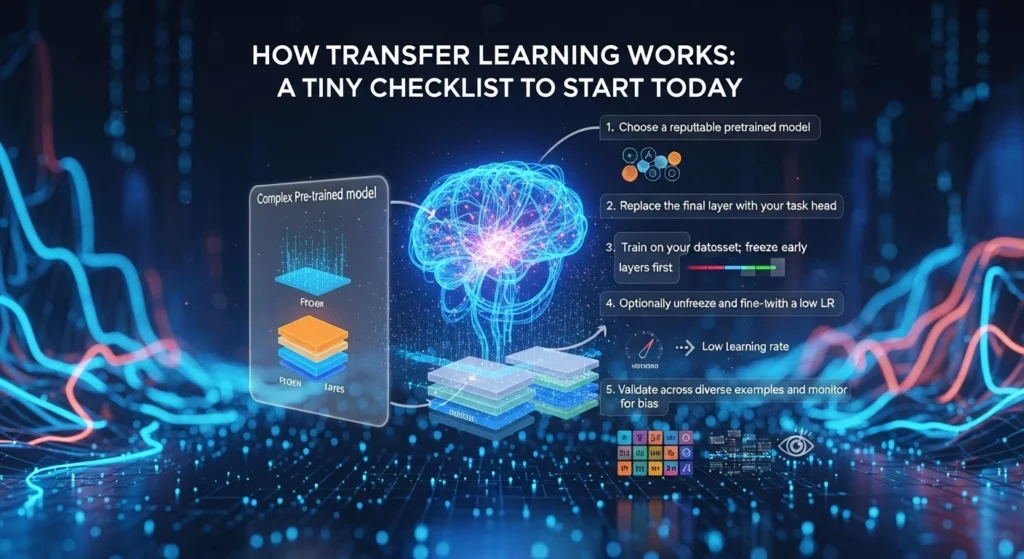

A tiny checklist to start today

- Choose a reputable pretrained model (e.g. a ResNet trained on ImageNet, or BERT).

- Replace the final layer with your task head.

- Freeze the early (pretrained) layers first, then train the new head on your dataset.

- Optionally, unfreeze some layers and fine-tune with a low learning rate.

- Validate across diverse examples and monitor for bias.
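
For the last checklist item, one simple check is to break validation accuracy down by slice instead of looking at a single average. This is a minimal sketch, assuming each validation example is tagged with a group you care about; the groups (`daylight`, `low_light`), labels, predictions, and the 5-point `max_gap` threshold are hypothetical. A large gap between slices doesn’t prove bias, but it tells you where to look before shipping.

```python
from collections import defaultdict


def accuracy_by_group(examples):
    """examples: iterable of (group, label, prediction). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, label, pred in examples:
        total[group] += 1
        correct[group] += int(label == pred)
    return {g: correct[g] / total[g] for g in total}


def flag_gaps(per_group, max_gap=0.05):
    """Return groups whose accuracy trails the best group by more than max_gap."""
    if not per_group:
        return []
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)


# HYPOTHETICAL validation results for a product-photo classifier:
# (slice the photo belongs to, true label, model prediction)
RESULTS = [
    ("daylight", "mug", "mug"),
    ("daylight", "mug", "mug"),
    ("daylight", "lamp", "lamp"),
    ("daylight", "lamp", "lamp"),
    ("low_light", "mug", "mug"),
    ("low_light", "mug", "lamp"),
    ("low_light", "lamp", "lamp"),
    ("low_light", "lamp", "mug"),
]

per_group = accuracy_by_group(RESULTS)
print(per_group)             # {'daylight': 1.0, 'low_light': 0.5}
print(flag_gaps(per_group))  # ['low_light']
```

Here the overall accuracy is 75%, which looks fine until you see that low-light photos are right only half the time.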

Final thought: why I like it

Transfer learning feels like smart recycling: grab proven building blocks, adapt them, and focus effort where it matters. It’s practical, human-friendly, and fits well with iterative product development. If you want to prototype an ML feature this week, transfer learning is your best bet. Try it, tweak it, and surprise yourself. ✨